In this post I'd like to show you some kernels, their effects on an image, and how to use modern OpenGL for image post-processing.

Before getting into image post-processing, you should already understand how framebuffers work and how to render a scene to a texture.

Now you need a shader to display the texture. A simple shader that displays a 2D texture and applies a 3×3 kernel to it looks something like this:

Vertex Shader:

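```glsl
#version 330 core  // needed for layout(location = ...) on vertex inputs

layout (location = 0) in vec3 in_position;
layout (location = 1) in vec3 in_normal;
layout (location = 2) in vec2 in_texture_coords;

// OUTS (matched by name in the fragment shader)
out vec3 v_normal;
out vec3 v_pos;
out vec2 v_uv;

void main() {
    v_uv = in_texture_coords;
    v_normal = in_normal;
    v_pos = in_position;
    // The quad is expected in normalized device coordinates already
    gl_Position = vec4(in_position, 1.0);
}
```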

The Vertex Shader just passes things through.
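Because the vertex shader writes in_position straight to gl_Position, the quad you draw must already be in normalized device coordinates (-1 to 1). A minimal Python sketch using ctypes, assuming an interleaved layout; the QuadVertex name and the quad data are hypothetical, chosen to match the three attribute locations above:

```python
import ctypes

# Hypothetical interleaved vertex layout matching the vertex shader:
# location 0 = position (vec3), 1 = normal (vec3), 2 = uv (vec2).
class QuadVertex(ctypes.Structure):
    _fields_ = [
        ("position", ctypes.c_float * 3),
        ("normal", ctypes.c_float * 3),
        ("uv", ctypes.c_float * 2),
    ]

def make_fullscreen_quad():
    """Two triangles covering the whole screen in NDC, with UVs 0..1."""
    corners = [  # (x, y, u, v)
        (-1.0, -1.0, 0.0, 0.0), (1.0, -1.0, 1.0, 0.0), (1.0, 1.0, 1.0, 1.0),
        (-1.0, -1.0, 0.0, 0.0), (1.0, 1.0, 1.0, 1.0), (-1.0, 1.0, 0.0, 1.0),
    ]
    return (QuadVertex * len(corners))(
        *[QuadVertex((x, y, 0.0), (0.0, 0.0, 1.0), (u, v)) for x, y, u, v in corners]
    )

quad = make_fullscreen_quad()
# Stride and byte offsets for the three attributes
stride = ctypes.sizeof(QuadVertex)
offsets = (QuadVertex.position.offset,
           QuadVertex.normal.offset,
           QuadVertex.uv.offset)
```

The stride (32 bytes) and the offsets (0, 12, 24) are what you would pass to glVertexAttribPointer for locations 0, 1 and 2 after uploading this array to a vertex buffer.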

Fragment Shader:

```glsl
#version 330 core

// Matched by name with the vertex shader outputs
// (layout locations on fragment inputs need GLSL 4.10 or an extension)
in vec3 v_pos;
in vec3 v_normal;
in vec2 v_uv;

uniform float near_plane;  // not used by this effect
uniform float far_plane;   // not used by this effect
uniform sampler2D rendered_texture;  // the scene rendered to a texture

out vec4 frag_color;

void main() {
    // Resolution of mip level 0 in texels
    ivec2 texture_size = textureSize(rendered_texture, 0);
    // Size of one texel in texture coordinates
    vec2 step_size = 1.0 / vec2(texture_size);

    // Offsets to the 3x3 neighborhood, bottom row first, left to right
    vec2 offsets[9] = vec2[](
        vec2(-step_size.x, -step_size.y),
        vec2( 0.0f,        -step_size.y),
        vec2( step_size.x, -step_size.y),
        vec2(-step_size.x,  0.0f),
        vec2( 0.0f,         0.0f),
        vec2( step_size.x,  0.0f),
        vec2(-step_size.x,  step_size.y),
        vec2( 0.0f,         step_size.y),
        vec2( step_size.x,  step_size.y)
    );

    float kernel[9] = float[](
        -1, -1, -1,
        -1,  9, -1,
        -1, -1, -1
    );

    // Weighted sum of the neighborhood
    vec4 sum = vec4(0.0);
    for (int i = 0; i < 9; i++) {
        sum += texture(rendered_texture, v_uv + offsets[i]) * kernel[i];
    }

    sum.a = 1.0;  // force full opacity
    frag_color = sum;
}
```

What the code above does: first we need to know our step size. For this we use the GLSL built-in function textureSize: you give it your sampler2D and the mipmap level (LOD), and it returns the texture's resolution in texels.

From that we compute step_size, the size of one texel in texture coordinates, and use it to build the offsets to the neighboring pixels. This post by Victor Powell explains everything really well.
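The offset computation is easy to check on the CPU. This Python sketch mirrors the shader's step_size and offsets arrays for a given resolution; texel_offsets is a hypothetical helper, and the 4×2 texture size is only an example value for what textureSize might return.

```python
def texel_offsets(width, height):
    """Mirror of the shader's step_size/offsets for a width x height texture.

    Returns the 9 (du, dv) offsets in texture coordinates,
    bottom row first, left to right (same order as the shader).
    """
    step_x, step_y = 1.0 / width, 1.0 / height  # one texel in UV space
    return [(dx * step_x, dy * step_y)
            for dy in (-1, 0, 1)
            for dx in (-1, 0, 1)]

offsets = texel_offsets(4, 2)
```

For a 4×2 texture one texel is 0.25 wide and 0.5 tall, so the first offset is (-0.25, -0.5) and the center offset is (0.0, 0.0).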

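Applying a 3×3 kernel $k(i, j)$ to an image $I$ gives, for every pixel $(x, y)$:

$$O(x, y) = \sum_{i=0}^{2} \sum_{j=0}^{2} k(i, j)\, I(x - i + a,\ y - j + a)$$

In our case $(x, y)$ is v_uv (one step in $i$ or $j$ is one step_size) and $a = 1$ is the kernel radius, half the kernel width rounded down. We multiply each color value by the matching entry of the kernel matrix and sum the results, so texture(rendered_texture, v_uv + offsets[i]) is the $I(x - i + a,\ y - j + a)$ term. Note that the shader pairs kernel[i] with offsets[i] without flipping the kernel; for symmetric kernels such as the sharpen and edge detection kernels below, this gives the same result.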

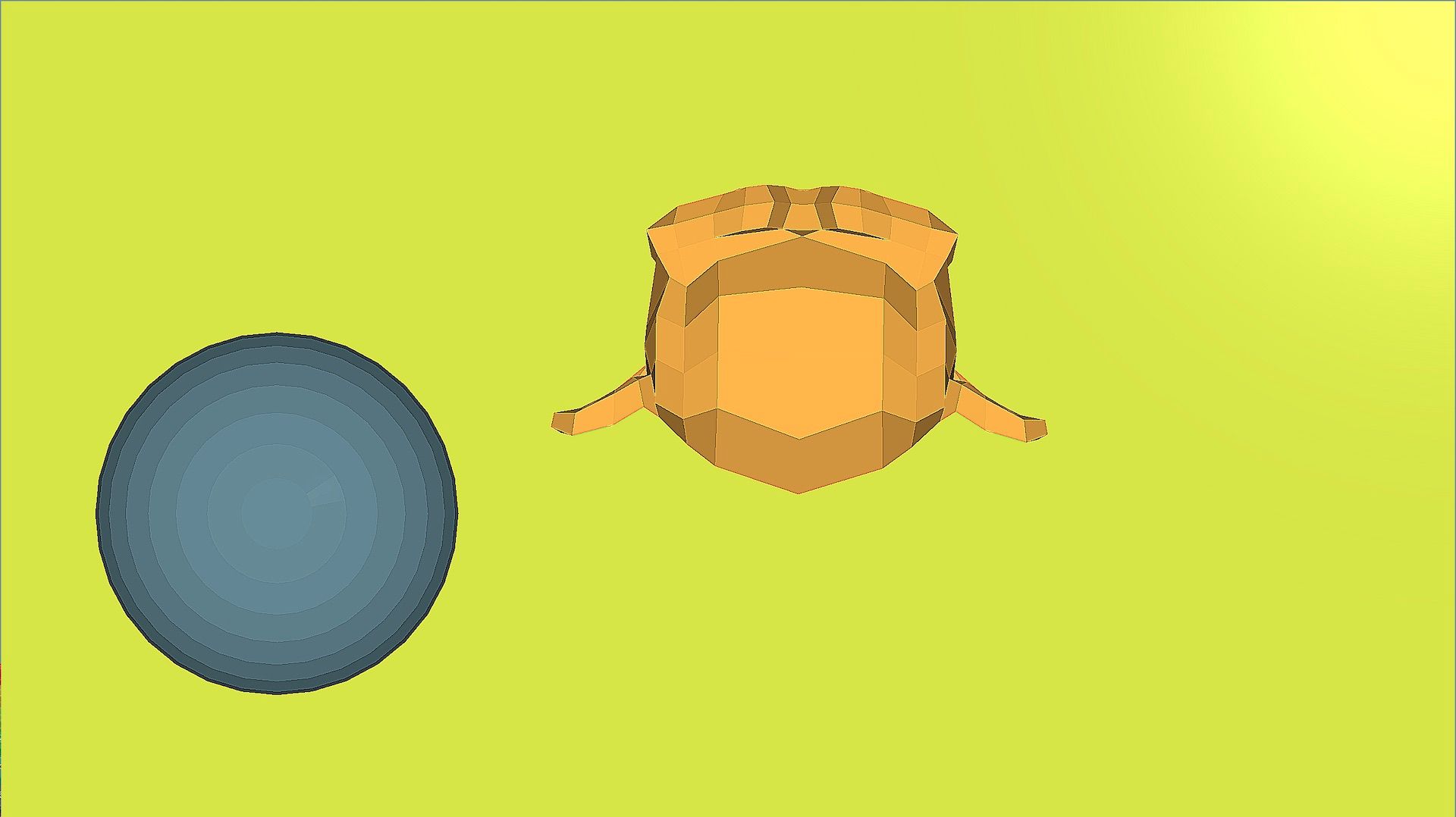

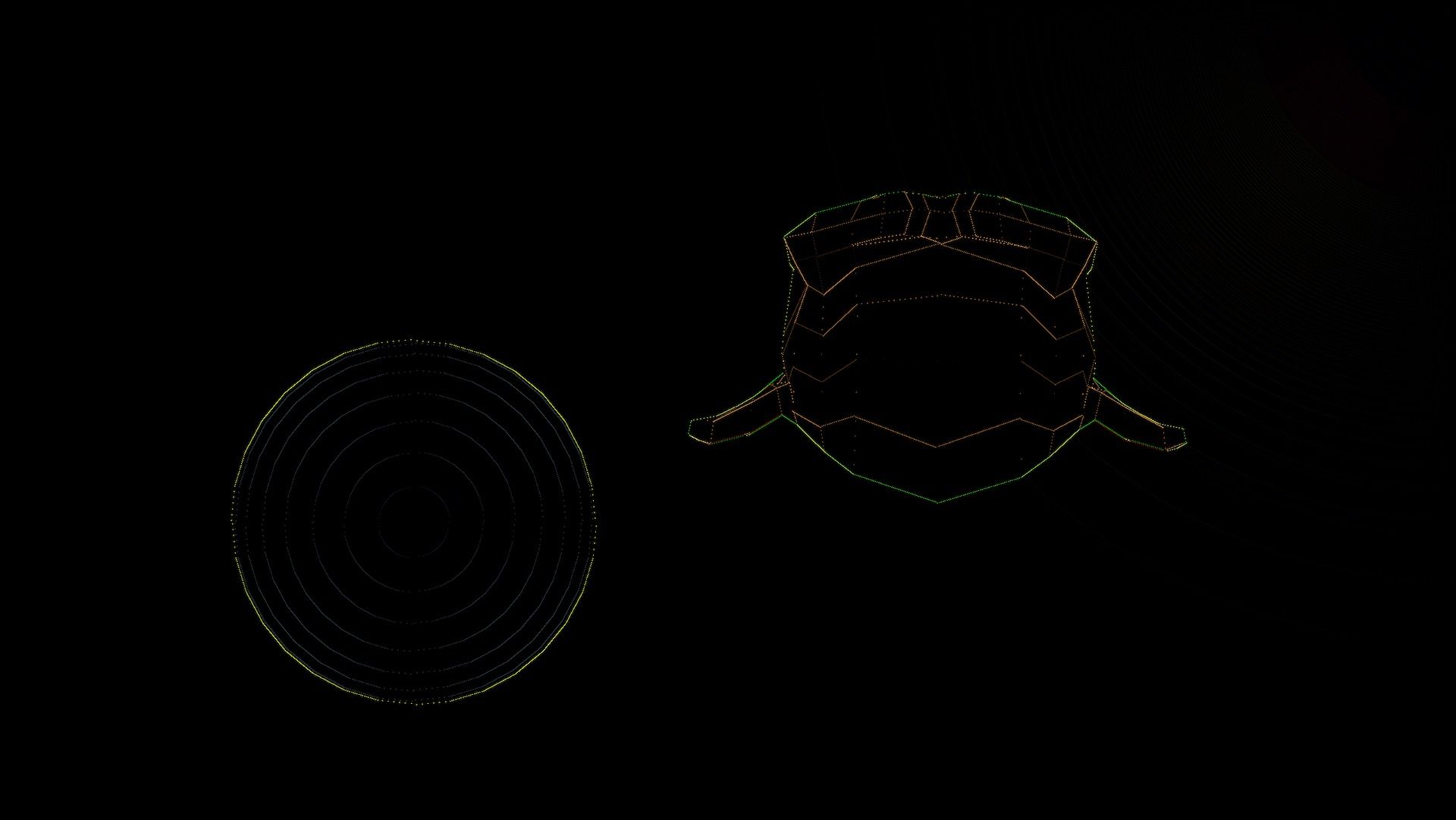
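To see what the fragment shader loop computes, here is a small CPU reference in Python for a grayscale image. It is a sketch, not the shader itself: apply_kernel is a hypothetical helper, it assumes one fragment per texel, and it clamps out-of-range samples to the nearest edge pixel, which matches GL_CLAMP_TO_EDGE wrapping. Like the shader, it pairs kernel[i] with offsets[i] without flipping the kernel.

```python
def apply_kernel(image, kernel):
    """CPU reference of the fragment shader loop for a grayscale image.

    image  -- list of rows (row 0 = bottom, like OpenGL textures)
    kernel -- 9 weights in the same order as the shader's offsets array
    Out-of-range samples are clamped to the edge (assumes GL_CLAMP_TO_EDGE).
    """
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for i in range(9):
                dx, dy = i % 3 - 1, i // 3 - 1        # same order as offsets[i]
                sx = min(max(x + dx, 0), width - 1)   # clamp-to-edge sampling
                sy = min(max(y + dy, 0), height - 1)
                total += image[sy][sx] * kernel[i]
            row.append(total)
        result.append(row)
    return result

SHARPEN = [-1, -1, -1,
           -1,  9, -1,
           -1, -1, -1]

flat = [[0.5] * 4 for _ in range(4)]
sharpened = apply_kernel(flat, SHARPEN)
```

On a uniform image every output pixel stays 0.5, because the weights of this kernel sum to 1; only pixels that differ from their neighbors are changed.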

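With the kernel used in the example above, we get the following result.

Strictly speaking, this kernel is a sharpening filter rather than an edge detector: its weights sum to 1, so flat areas keep their color while the difference to the neighboring pixels is added on top. You can see in the picture below that the edges are sharpened.

Sharpen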

```glsl
float kernel[9] = float[](
    -1, -1, -1,
    -1,  9, -1,
    -1, -1, -1
);
```
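The sharpening effect follows from the structure of this kernel: it is the identity kernel plus an 8-neighbor Laplacian, and its weights sum to 1. A short Python check of both facts (the constant names are only for illustration):

```python
IDENTITY = [0, 0, 0,
            0, 1, 0,
            0, 0, 0]
LAPLACE_8 = [-1, -1, -1,
             -1,  8, -1,
             -1, -1, -1]
SHARPEN = [-1, -1, -1,
           -1,  9, -1,
           -1, -1, -1]

# Sharpening = keep the original pixel + add the edge (Laplacian) response
combined = [a + b for a, b in zip(IDENTITY, LAPLACE_8)]

# The sum of the weights decides what happens to flat, uniform areas:
# 1 keeps their brightness, 0 turns them black.
print(sum(SHARPEN), sum(LAPLACE_8))  # 1 0
```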

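If you change the values slightly, you get an actual edge detector (a Laplacian kernel). Its weights sum to 0, so flat areas turn black and only the edges remain: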

```glsl
float kernel[9] = float[](
     0, -1,  0,
    -1,  4, -1,
     0, -1,  0
);
```
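With weights summing to 0, a uniform area produces 0 (black), and only places where the brightness changes give a response. This Python sketch applies that kernel (named LAPLACE_4 here, a hypothetical name) to a small vertical step edge. It assumes a normalized color buffer such as GL_RGBA8, which clamps the output to [0, 1], so the negative response on the dark side of the edge is displayed as black.

```python
LAPLACE_4 = [ 0, -1,  0,
             -1,  4, -1,
              0, -1,  0]

def laplace_at(image, x, y):
    """Apply LAPLACE_4 at an interior pixel (x, y) of a grayscale image."""
    total = 0.0
    for i, weight in enumerate(LAPLACE_4):
        dx, dy = i % 3 - 1, i // 3 - 1   # same neighbor order as the shader
        total += image[y + dy][x + dx] * weight
    return total

# A vertical step edge: dark left columns, bright right columns (5x5).
step = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]

responses = [laplace_at(step, x, 2) for x in range(1, 4)]   # [-1.0, 1.0, 0.0]
# A normalized framebuffer clamps to [0, 1], so only the bright side shows.
visible = [min(max(r, 0.0), 1.0) for r in responses]        # [0.0, 1.0, 0.0]
```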

Just try it out for yourself in your own shader; it's really fun to see the results instantly.

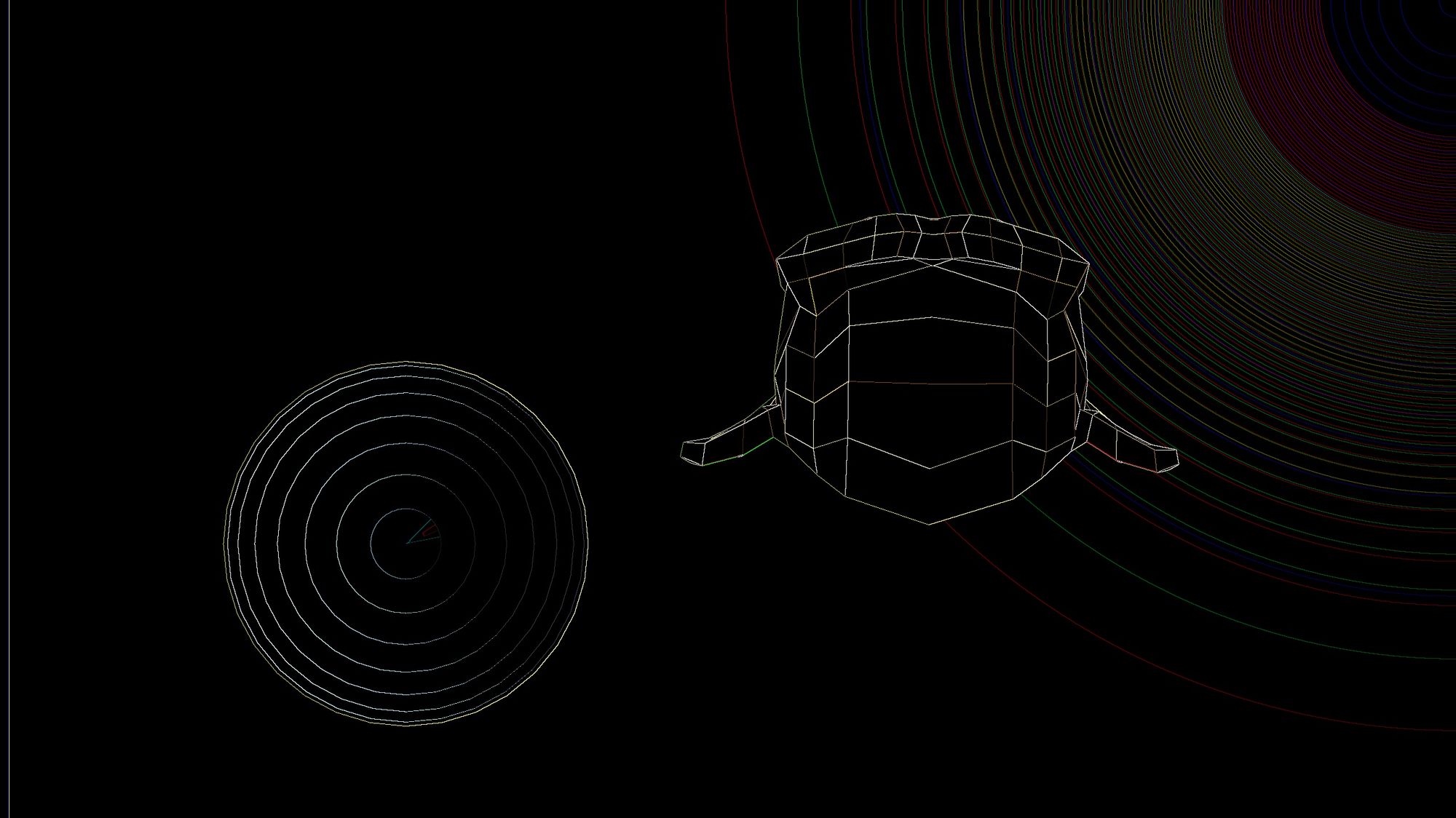

For example, the following kernel:

```glsl
float kernel[9] = float[](
    -20, -1,  0,
    -20, 63, -1,
    -20, -1,  0
);
```

results in a funny-looking image.
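Unlike the previous kernels, this one is not symmetric, so the direction in which it is applied matters. The shader pairs kernel[i] with offsets[i] directly (a correlation), while a textbook convolution rotates the kernel by 180 degrees first. If you want the convolution result, flip the array before putting it into the shader. A minimal Python sketch, with flip_kernel as a hypothetical helper:

```python
def flip_kernel(kernel):
    """Rotate a 3x3 kernel (flat, row-major list of 9 weights) by 180 degrees.

    Entry (row, col) moves to (2 - row, 2 - col), i.e. index k -> 8 - k,
    which for a flat list is simply the reversed list.
    """
    return kernel[::-1]

FUNNY = [-20, -1,  0,
         -20, 63, -1,
         -20, -1,  0]

flipped = flip_kernel(FUNNY)   # [0, -1, -20, -1, 63, -20, 0, -1, -20]
```

For the symmetric sharpen and edge detection kernels, flipping returns the same array, which is why the distinction did not matter there.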
